Mastering the Sliding Window Rate Limiting Algorithm

- 2116 words

- 10 minutes read

- Updated on 25 Apr 2024

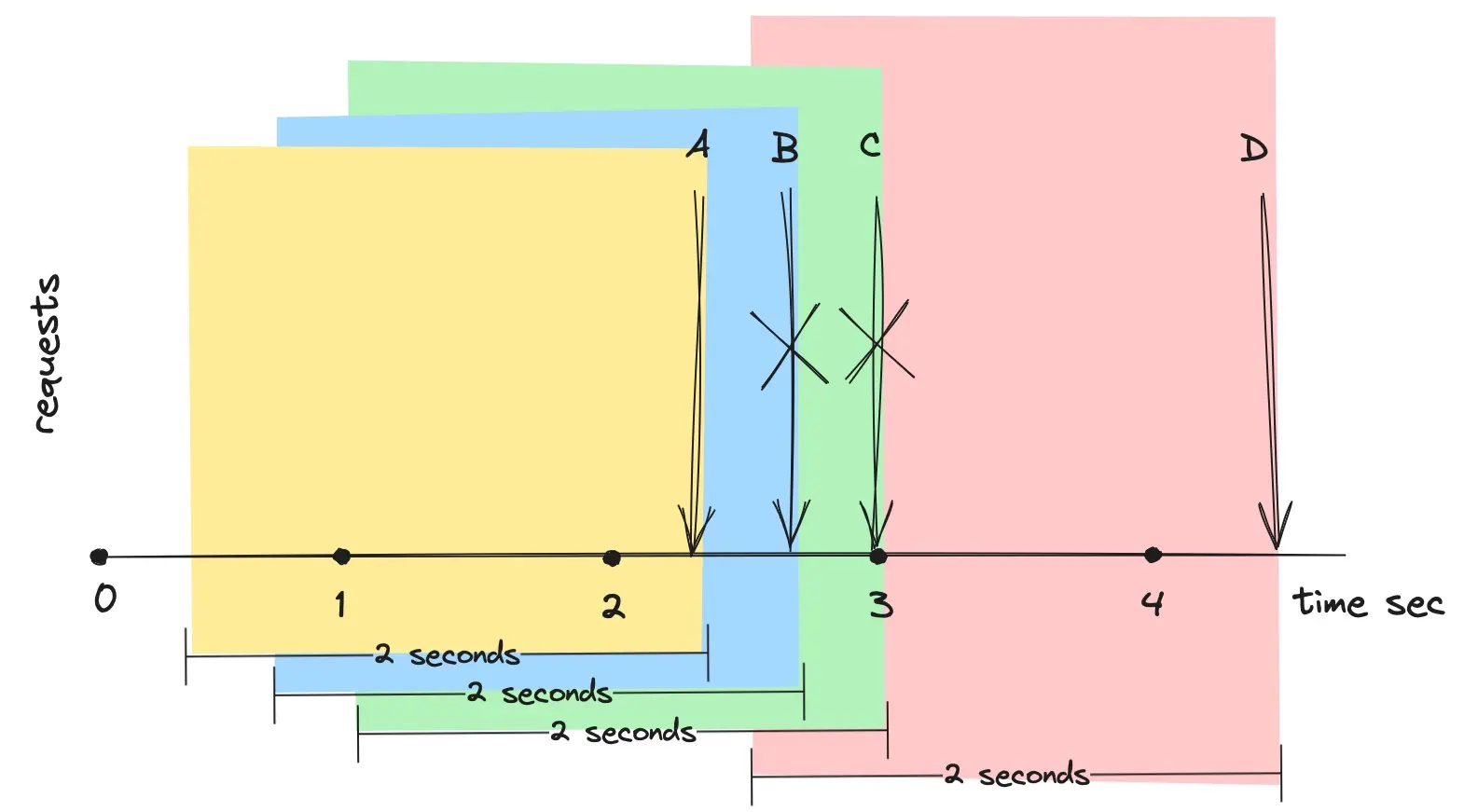

The Sliding Window Rate Limiting is similar to the Fixed Window Rate Limiting but differs in its approach to managing the time window. Unlike the fixed time window, which is static, the sliding window moves continuously along the request timeline. It monitors requests within the current window, rejecting additional requests once the window’s limit is reached. As the window slides forward on the timeline, older requests that fall outside the window are discarded, creating space for new requests.

In the figure above, the rate limit is one request per two seconds. Request A is processed successfully. When Request B arrives, the window moves right, using B’s current time as the end of the rolling window, but B is rejected because it falls within the same two-second window as A. Request C, arriving later, is also throttled for including A within its window. Request D, however, arrives outside A’s two-second window and is thus processed successfully.

How to Implement a Sliding Window Log Rate Limiter?

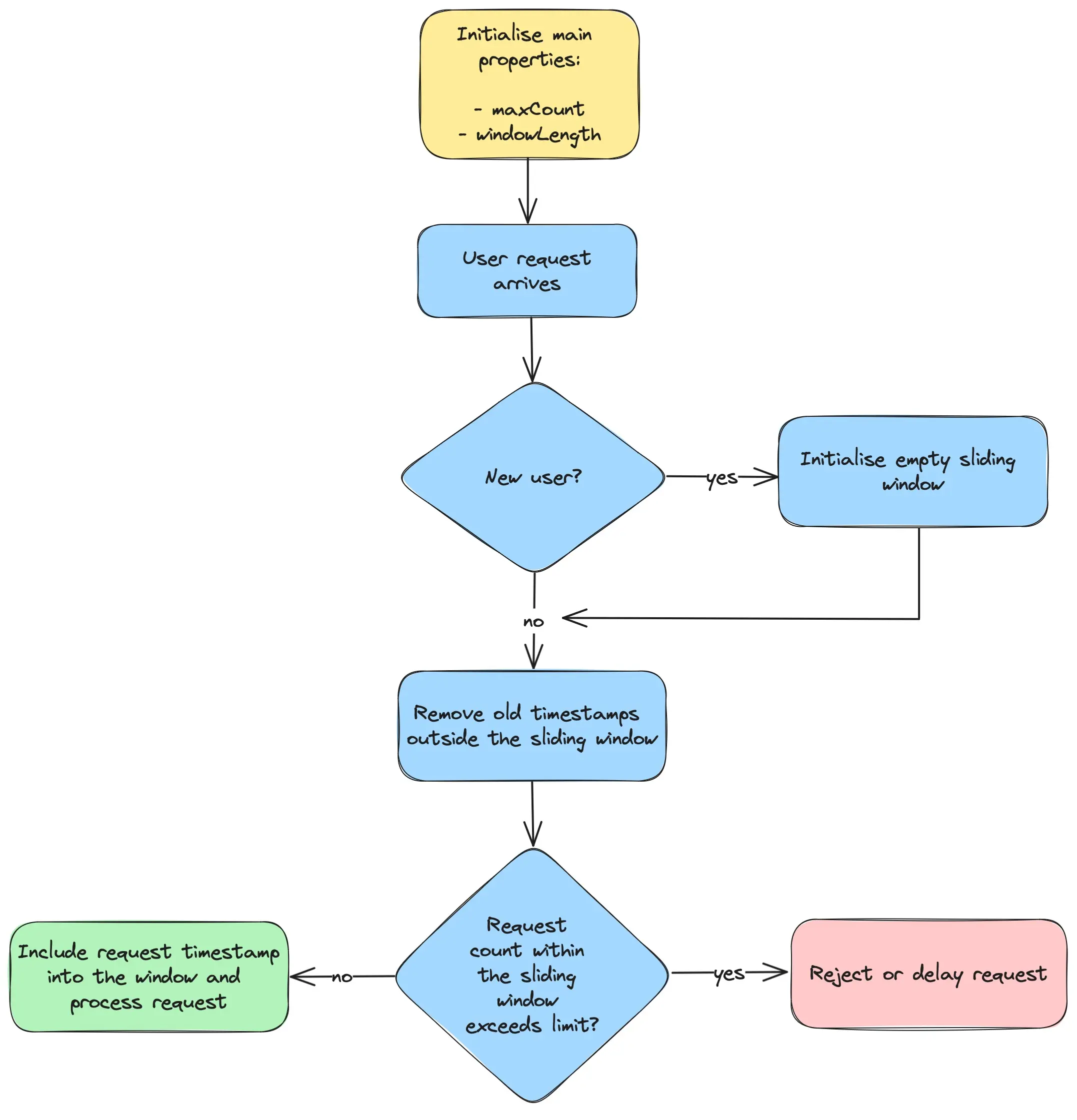

This is a classic implementation which is commonly known as the Sliding Window Log Rate Limiter. To control the rate it initializes two key properties: the maximum number of requests allowed per time window and the window’s duration. As shown in the flow diagram below, a new sliding window is created once for each new user. This window tracks the timestamps of the user’s requests. Upon the arrival of a new request, any old timestamps outside the sliding window are discarded to accommodate new ones. If the number of requests within the window exceeds the set limit, the incoming request is throttled. Otherwise, it is added to the window and processed successfully.

To track user-specific request timestamps, you can use a dictionary with the user ID as the key and a deque of request timestamps as the value, represented as Map<String, Deque<Long>>. The deque functions as a sliding window, where old timestamps are discarded and new ones are added as the window slides forward. Below is a Java implementation of the Sliding Window Log Rate Limiting algorithm:

import java.time.Clock;

import java.util.Deque;

import java.util.HashMap;

import java.util.LinkedList;

import java.util.Map;

public class SlidingWindowLogRateLimiter {

private final int maxCount;

private final long windowLengthMillis;

private final Clock clock;

private final Map<String, Deque<Long>> userSlidingWindow = new HashMap<>();

SlidingWindowLogRateLimiter(int maxCount, long windowLengthMillis, Clock clock) {

this.maxCount = maxCount;

this.windowLengthMillis = windowLengthMillis;

this.clock = clock;

}

boolean allowed(String userId) {

long now = clock.millis();

// Initialize an empty sliding window for new users,

// or retrieve the existing window.

Deque<Long> slidingWindow = userSlidingWindow

.computeIfAbsent(userId, k -> new LinkedList<>());

// Remove timestamps outside the current sliding window.

while (!slidingWindow.isEmpty()

&& slidingWindow.getFirst() + windowLengthMillis < now) {

slidingWindow.removeFirst();

}

// Check if the rate limit is exceeded.

// If so, reject the request; otherwise, add the current

// request's timestamp to the window and allow it.

if (slidingWindow.size() >= maxCount) {

return false;

} else {

slidingWindow.addLast(now);

return true;

}

}

}

The allowed method determines if a user’s request falls within the set limit. A new sliding window is created for each new user. Timestamps outside the window are removed, and new request timestamps are added to the window. Once the window reaches its maximum request capacity, further requests are rejected until the window advances and old timestamps are discarded.

The following Java test demonstrates the usage of this limiter:

import org.junit.jupiter.api.Test;

import java.time.Clock;

import static org.junit.jupiter.api.Assertions.assertFalse;

import static org.junit.jupiter.api.Assertions.assertTrue;

import static org.mockito.Mockito.mock;

import static org.mockito.Mockito.when;

public class SlidingWindowLogRateLimiterTest {

private static final String BOB = "Bob";

@Test

void allowed_burstyTraffic_acceptsAllRequestsWithinRateLimitThresholds() {

Clock clock = mock(Clock.class);

when(clock.millis()).thenReturn(0L, 999L, 1000L,

1001L, 1002L, 1999L, 2000L);

SlidingWindowLogRateLimiter limiter

= new SlidingWindowLogRateLimiter(2, 1000, clock);

// 0 seconds passed

assertTrue(limiter.allowed(BOB),

"Bob's request 1 at timestamp=0 must pass");

assertTrue(limiter.allowed(BOB),

"Bob's request 2 at timestamp=999 must pass");

// 1 second passed

assertFalse(limiter.allowed(BOB),

"Bob's request 3 at timestamp=1000 must not be allowed");

assertTrue(limiter.allowed(BOB),

"Bob's request 4 at timestamp=1001 must pass, because request 1" +

" at timestamp=0 is outside the current sliding window [1; 1001]");

assertFalse(limiter.allowed(BOB),

"Bob's request 5 at timestamp=1002 must not be allowed");

assertFalse(limiter.allowed(BOB),

"Bob's request 6 at timestamp=1999 must not be allowed");

// 2 seconds passed

assertTrue(limiter.allowed(BOB),

"Bob's request 7 at timestamp=2000 must pass, because request 2" +

" at timestamp=999 is outside the current sliding window [1000; 2000]");

}

}

This test simulates scenarios for bursty traffic to ensure the requests at the window’s border are handled correctly. More tests and the latest source code are available in the rate-limiting GitHub repository.

For additional implementations and insights, you can explore open-source resources such as:

How to Implement a Memory-Optimized Sliding Window Counter Rate Limiter?

The classic implementation above can be resource-intensive due to the need to store numerous request timestamps and perform complex calculations across distributed servers. This makes the Sliding Window Log Rate Limiting approach less scalable for handling large traffic bursts.

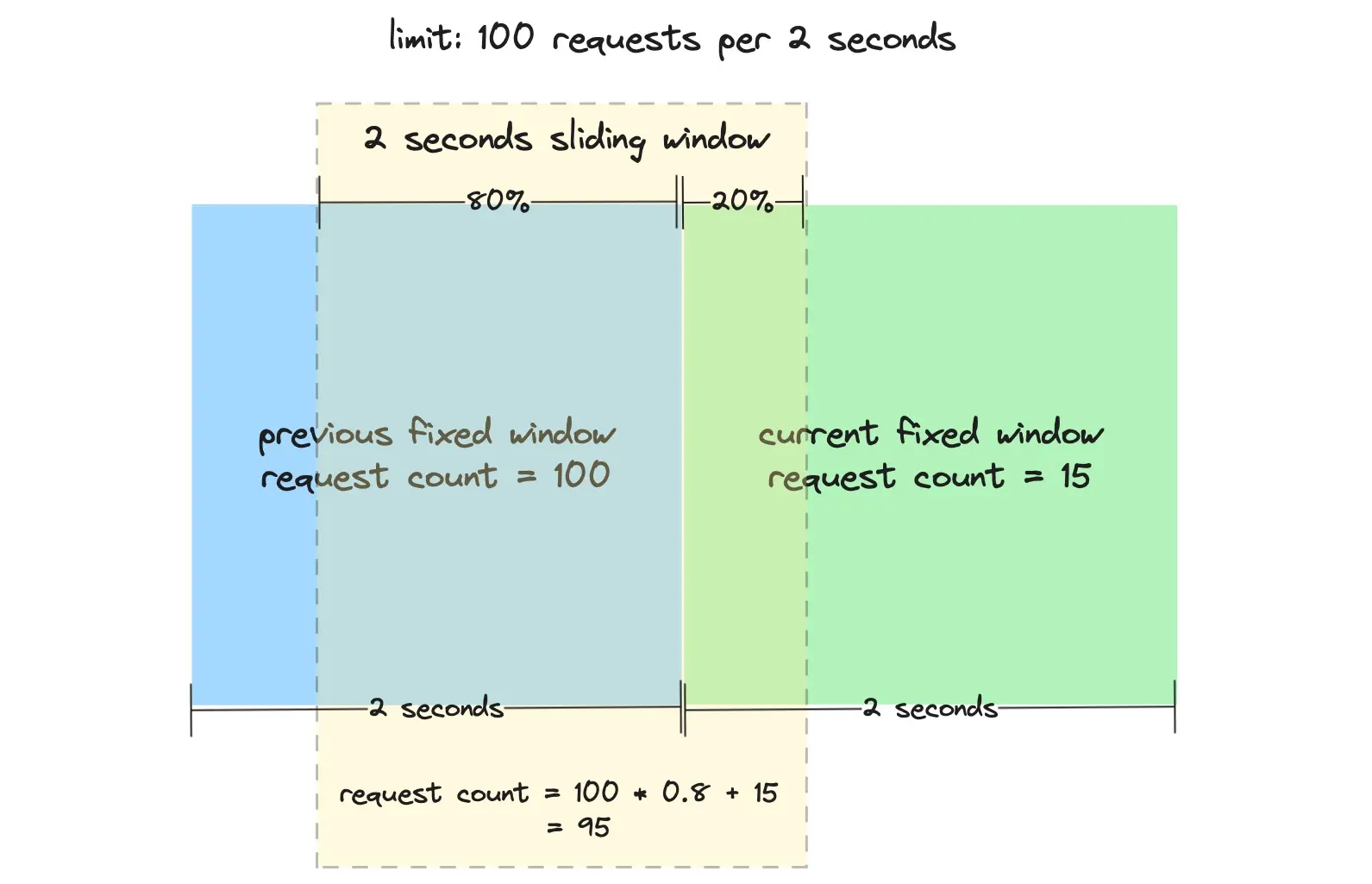

The optimized implementation, commonly referred to as the Sliding Window Counter Rate Limiter, aims to minimize memory usage by merging the low processing cost of the Fixed Window algorithm with the enhanced boundary conditions of the Sliding Window Log approach. It keeps a request counter for the previous and current fixed windows and leverages information from the previous window to calculate the available limit at the current timestamp.

Consider a scenario where you are allowed to make 100 requests every 2 seconds. Suppose you made 100 requests in the first 2 seconds and then made 15 requests within the first 400 ms (which is 20% of a new fixed window) of the next period. With the current window 20% elapsed, the count from the previous window is weighted by 80%, as shown in Figure 3. As a result, the current request count is calculated as follows:

count = 100 * 0.8 + 15 = 95 requests

100 - number of requests in the previous fixed window

0.8 - weight of the previous window (since the sliding window covers 20%

of the current fixed window and 80% of the previous one)

15 - number of requests in the current fixed window

Based on the limit set, you can still make 5 more requests.

This approach, which requires tracking less data per user, is more scalable across large clusters. Below is a Java implementation of the Sliding Window Counter Rate Limiter:

import java.time.Clock;

import java.util.HashMap;

import java.util.Map;

public class SlidingWindowCountRateLimiter {

private final int maxCount;

private final long windowLengthMillis;

private final Clock clock;

private final Map<String, SlidingWindow> userSlidingWindow = new HashMap<>();

SlidingWindowCountRateLimiter(int maxCount, long windowLengthMillis, Clock clock) {

this.maxCount = maxCount;

this.windowLengthMillis = windowLengthMillis;

this.clock = clock;

}

boolean allowed(String userId) {

long now = clock.millis();

// Initialize an empty sliding window for new users or retrieve existing one.

SlidingWindow slidingWindow = userSlidingWindow.computeIfAbsent(userId,

k -> new SlidingWindow(new FixedWindow(now, 0),

new FixedWindow(now, 0)));

FixedWindow currentFixedWindow = slidingWindow.currentFixedWindow();

FixedWindow previousFixedWindow = slidingWindow.previousFixedWindow();

// Transition to a new fixed window when the current one expires.

if (currentFixedWindow.timestamp() + windowLengthMillis < now) {

previousFixedWindow = currentFixedWindow;

currentFixedWindow = new FixedWindow(now, 0);

userSlidingWindow.put(userId,

new SlidingWindow(previousFixedWindow, currentFixedWindow));

}

// Weight calculation for the previous window.

long slidingWindowStart = Math.max(0, now - windowLengthMillis);

long previousFixedWindowEnd =

previousFixedWindow.timestamp() + windowLengthMillis;

// Weight of the previous window based on overlap with the sliding window.

double previousFixedWindowWeight =

Math.max(0, previousFixedWindowEnd - slidingWindowStart)

/ (double) windowLengthMillis;

// Calculate total request count within the sliding window.

int count = (int) (previousFixedWindow.count()

* previousFixedWindowWeight

+ currentFixedWindow.count());

// Check if the request count within the sliding window exceeds the limit.

// If so, reject the request; otherwise, update the request count

// in the current fixed window and allow the request.

if (count >= maxCount) {

return false;

} else {

currentFixedWindow = new FixedWindow(currentFixedWindow.timestamp(),

currentFixedWindow.count() + 1);

userSlidingWindow.put(userId,

new SlidingWindow(previousFixedWindow, currentFixedWindow));

return true;

}

}

private record SlidingWindow(FixedWindow previousFixedWindow,

FixedWindow currentFixedWindow) { }

private record FixedWindow(long timestamp, int count) { }

}

The sliding window tracks both the current and previous fixed windows, updating timestamps and request counts as needed. When the current window expires, it becomes the previous window, and a new window starts with zero requests. The current request count within the sliding window is determined by the weight of the previous fixed window and the number of requests made within the current fixed window. Once the sliding window reaches its maximum request capacity, further requests are rejected until the window advances and the influence of the previous fixed window diminishes.

However, there are a few drawbacks to this optimized approach worth noting:

- It is more complex to implement, debug, and maintain.

- The requirement for the look-back window’s timestamp to be flexible rather than strict.

What Are the Pros and Cons of the Sliding Window Algorithm?

Advantages of the Sliding Window Algorithm

Bursty Traffic Handling: The sliding window approach provides a more evenly distributed control over traffic than the fixed window algorithm, reducing the likelihood of sudden spikes that could potentially overwhelm the system. It effectively handles bursts of traffic resulting the number of wrongly allowed requests to be very low.

Fairness: This rate-limiting algorithm avoids the starvation problem of the leaky bucket algorithm by prioritizing recent requests and giving more weight to activity within the current window. This helps identify and mitigate ongoing attacks while ensuring active users aren’t unfairly penalized for past bursts.

Real-Time Adaptability: The algorithm can adapt more dynamically to real-time changes in request volume, offering a more accurate reflection of current usage patterns compared to a fixed window approach.

Disadvantages of the Sliding Window Algorithm

Complexity: Compared to simpler approaches like the fixed window or even the token bucket, the sliding window algorithm, often implemented with Redis as a data store, can be more complex to implement correctly, especially in distributed systems where time synchronization between servers can be an issue.

Resource Intensive: Tracking requests within a continuously moving window demands more memory and complex data structures. Additionally, the computation is costly, as each request involves summing up the user’s previous requests, possibly across a server cluster. Consequently, this approach may not scale well enough to handle large traffic bursts or mitigate denial of service attacks.

Predictability: Users may find it more difficult to predict when their rate limit will reset compared to a fixed window approach, as the available capacity can fluctuate more significantly within any given period.

Common Use Cases for Sliding Window Rate Limiting

The sliding window algorithm’s primary advantage is its ability to provide a more consistent and smooth control over the rate of operations or requests. This is particularly beneficial in scenarios where avoiding bursts of activity at the beginning or end of a rate limit window is important for maintaining system stability and user experience.

This algorithm offers more flexibility than fixed window rate limiting, adapting well to traffic spikes, making it suitable for applications and API with fluctuating usage patterns. Cloudflare, in their post How we built rate limiting capable of scaling to millions of domains, shares insights from incorporating this algorithm into their system, highlighting its effectiveness in a real-time environment:

It is still very accurate, as an analysis on 400 million requests from 270,000 distinct sources shown:

- 0.003% of requests have been wrongly allowed or rate limited

- An average difference of 6% between real rate and the approximate rate

- 3 sources have been allowed despite generating traffic slightly above the threshold (false negatives), the actual rate was less than 15% above the threshold rate

- None of the mitigated sources was below the threshold (false positives)

Summary

The Sliding Window Rate Limiting algorithm offers a great solution for applications requiring nuanced control over request rates. Despite its complexity and the demands it places on system resources, its benefits in terms of traffic management, fairness, and adaptability make it a valuable tool for maintaining system stability and ensuring a positive user experience.

Frequently Asked Questions (FAQ)

Q: What Exactly Is a Sliding Window Rate Limiter Algorithm?

A: The sliding window rate limiter algorithm is a technique used to manage how frequently a user or client can access resources within a set time frame, ensuring equitable resource use and system stability.

Q: How Does Redis Facilitate Sliding Window Rate Limiting?

A: Redis is instrumental in sliding window rate limiting for its ability to quickly process and store request data, providing a scalable solution for tracking and enforcing request limits.

Q: What Advantages Does a Sliding Window Rate Limiter Offer for API Management?

A: A sliding window rate limiter ensures precise, dynamic control of API request rates, adjusting limits based on real-time traffic patterns to optimize system performance and resource distribution.