Machine Learning Mind Map: Essential Concepts and Foundations

- Updated on 25 Apr 2024

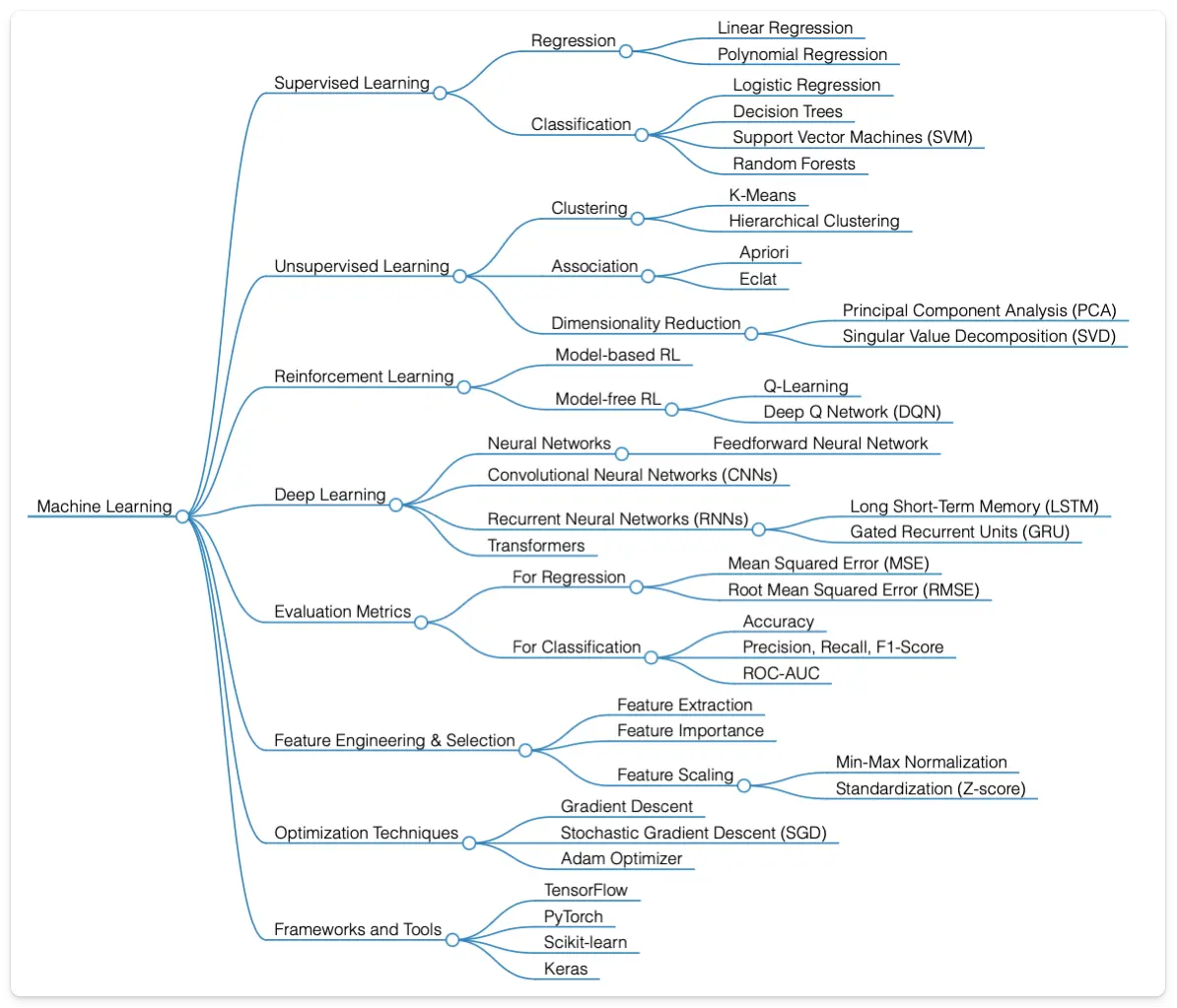

In the rapidly evolving world of technology, Machine Learning (ML) is a revolutionary force driving innovation across industries. From enhancing customer experiences to automating tedious tasks, ML’s impact is undeniable. Below is a Machine Learning mind map that illustrates the primary ML building blocks. This mind map is followed by a concise overview of the core concepts and techniques in Machine Learning, aiming to demystify this complex field.

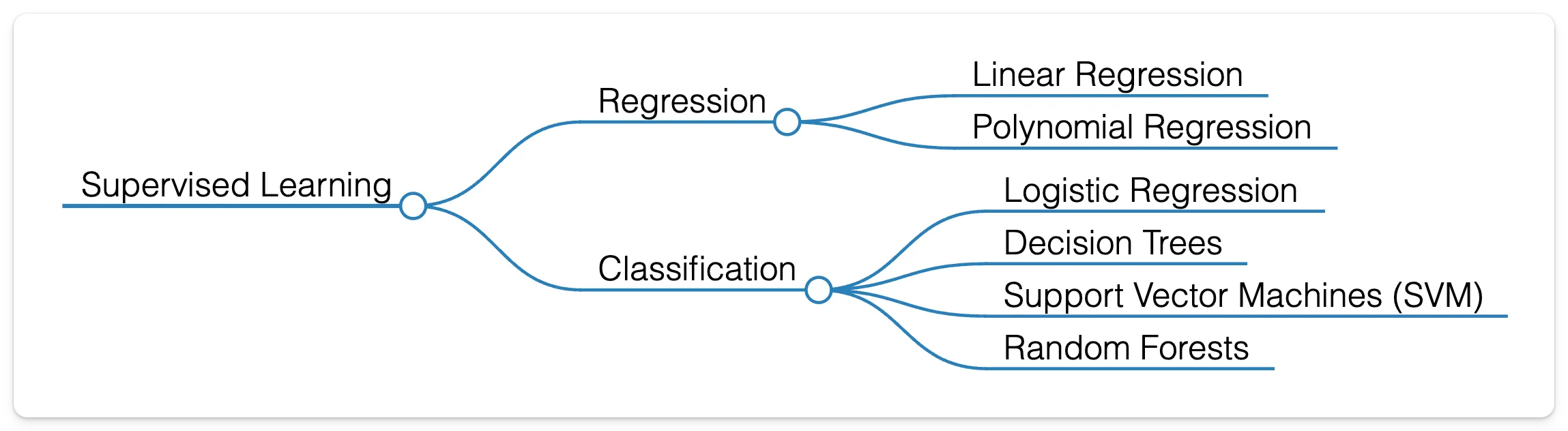

Supervised Learning: Teaching Computers to Predict

At the heart of ML lies Supervised Learning, a method where models learn to make predictions from labelled data, that is, examples in which each input is paired with a known output. The two fundamental task types are Regression and Classification.

Regression

Linear Regression and Polynomial Regression are techniques used to predict continuous values. For example, real estate companies can use Linear Regression to forecast house prices based on size, location, and amenities.

Meanwhile, agricultural researchers can use Polynomial Regression to predict crop yields based on various environmental factors. Because the relationship between these factors and yield can be nonlinear, the polynomial terms let the model fit curves that a straight line would miss.
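To make the linear case concrete, here is a minimal sketch of ordinary least squares with a single feature, written in plain Python. The house sizes, prices, and the `fit_line` helper are hypothetical and chosen only for illustration; real projects would typically rely on a library such as Scikit-learn.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: house size (square metres) and price (thousands)
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 100 + intercept  # estimate for a 100 m² house
```

Polynomial Regression follows the same idea, except that powers of the input (x², x³, and so on) are added as extra features before fitting.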

Classification

Classification determines the category to which an observation belongs, utilizing methods like Logistic Regression, Decision Trees, Support Vector Machines (SVM), and Random Forests for various applications from spam detection to customer segmentation.

Logistic Regression can support medical diagnostic applications by classifying test results as positive or negative for a disease. Decision Trees are used in customer service to direct customer inquiries to the appropriate department based on the content of the inquiry. SVMs are instrumental in handwriting recognition, differentiating between different letters and numbers. Random Forests enhance predictive accuracy in banking to assess the risk levels of loan applicants.
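As a rough illustration of Logistic Regression for a positive/negative decision, the sketch below trains a single-feature model with batch gradient descent. The standardized readings, labels, learning rate, and function names are all assumptions made for this example, not part of any particular diagnostic system.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit weight w and bias b for one feature with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the average log-loss with respect to w and b
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return 1 (positive) if the predicted probability is at least 0.5."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


# Hypothetical standardized test readings: 0 = negative, 1 = positive
readings = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
labels = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(readings, labels)
low_result = predict(w, b, -0.5)
high_result = predict(w, b, 0.5)
```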

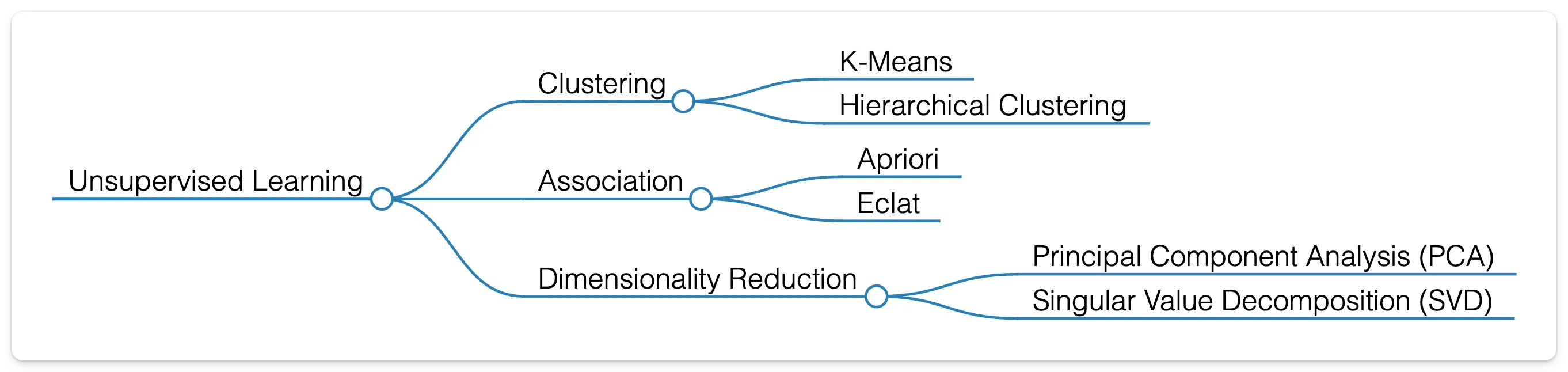

Unsupervised Learning: Discovering Hidden Patterns

Unsupervised Learning enables models to find structure in unlabelled data. Essential techniques include Clustering, Association, and Dimensionality Reduction.

Clustering

This approach involves grouping items that are similar to one another. K-Means and Hierarchical Clustering are two vital techniques for market segmentation and social network analysis. For instance, marketing teams can use K-Means to segment customers based on purchasing behaviour, enabling tailored marketing strategies for different groups. Hierarchical Clustering can be utilized in genomics to group genes with similar expression patterns, indicating functional relationships.
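The K-Means procedure can be sketched in a few lines of plain Python. The customer data (visits per month, average spend) and the choice of starting centroids below are hypothetical; production code would normally use a library implementation with a more careful initialization.

```python
def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate an assignment step and an update step."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            dists = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[dists.index(min(dists))].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                cx = sum(p[0] for p in cluster) / len(cluster)
                cy = sum(p[1] for p in cluster) / len(cluster)
                new_centroids.append((cx, cy))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers: (visits per month, average spend)
customers = [(1, 10), (2, 12), (1, 14), (9, 80), (10, 85), (11, 90)]
start = [customers[0], customers[3]]  # assumed starting centroids

centroids, clusters = kmeans(customers, start)
```

Here the occasional low spenders and the frequent high spenders end up in separate clusters, which is the kind of split a marketing team could act on.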

Association

This technique uncovers relationships between variables, typically items that tend to occur together in the same transaction, using algorithms such as Apriori and Eclat. For example, the Apriori algorithm can reveal that bread and milk are frequently bought together in a grocery store, guiding the store to consider strategic product placements or bundled promotions to enhance sales.

Similarly, an online retailer using the Eclat algorithm might find that customers purchasing smartphones also buy cases and screen protectors. This would allow the retailer to improve its recommendation system by suggesting these accessories to smartphone buyers, thereby boosting cross-selling opportunities.
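The core quantity behind both algorithms is support: the fraction of transactions that contain a given set of items. The sketch below counts support for item pairs by brute force. It is only an illustration of the idea, not an implementation of Apriori or Eclat, which add candidate pruning and more efficient data layouts. The baskets and the `frequent_pairs` helper are hypothetical.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(transactions, min_support):
    """Return item pairs whose support (share of baskets) meets min_support."""
    counts = Counter()
    for basket in transactions:
        # Sort so that each pair is always stored in the same order
        for pair in combinations(sorted(set(basket)), 2):
            counts[pair] += 1
    n = len(transactions)
    return {pair: c / n for pair, c in counts.items() if c / n >= min_support}


# Hypothetical grocery baskets
baskets = [
    ["bread", "milk"],
    ["bread", "milk", "eggs"],
    ["milk", "eggs"],
    ["bread", "milk", "butter"],
]

pairs = frequent_pairs(baskets, min_support=0.5)
```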

Dimensionality Reduction

This approach simplifies data while preserving its essence through techniques like Principal Component Analysis (PCA) and Singular Value Decomposition (SVD), which are crucial for data visualization and noise reduction.

For example, PCA is widely used in finance to simplify stock market data by identifying the key patterns that drive market movements. This enables analysts to focus on a small number of underlying factors rather than on every individual stock, making more informed investment decisions.
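As a small illustration, the sketch below estimates the first principal component of two-dimensional data using power iteration on the covariance matrix. This is a simplified stand-in chosen to keep the example dependency-free; library implementations typically rely on SVD instead. The return figures and the function name are hypothetical.

```python
def first_principal_component(rows, steps=100):
    """Estimate the top principal direction of 2-D data by power iteration."""
    n = len(rows)
    mean = [sum(r[j] for r in rows) / n for j in range(2)]
    centered = [[r[j] - mean[j] for j in range(2)] for r in rows]
    # 2x2 sample covariance matrix
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(2)]
        for i in range(2)
    ]
    v = [1.0, 0.0]  # arbitrary starting direction
    for _ in range(steps):
        # Multiply by the covariance matrix, then rescale to unit length
        w = [
            cov[0][0] * v[0] + cov[0][1] * v[1],
            cov[1][0] * v[0] + cov[1][1] * v[1],
        ]
        norm = (w[0] ** 2 + w[1] ** 2) ** 0.5
        v = [w[0] / norm, w[1] / norm]
    return v


# Hypothetical daily returns (in percent) of two stocks that move together
returns = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 4.0)]

direction = first_principal_component(returns)
```

Because the two hypothetical stocks move in lockstep, the resulting direction weights them equally, so a single component captures all of the variation.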

SVD finds practical application in natural language processing, specifically in semantic analysis. For instance, SVD processes large matrices of word occurrences in documents (term-document matrices) to identify latent semantic structures. This helps improve search engines’ ability to understand and match users’ query intent with relevant papers or articles, even when exact keywords are not used.

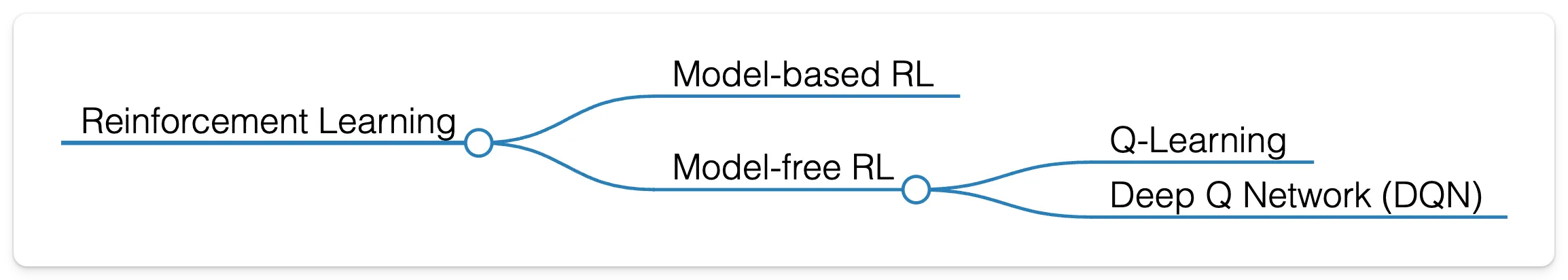

Reinforcement Learning: Learning Through Interaction

Reinforcement Learning (RL) enables models to learn how to make decisions via trial and error by interacting with an environment and receiving rewards as feedback. Model-based RL, in which the agent learns or is given a model of how the environment behaves and uses it to plan, finds applications in robotics, aiding robots in learning to navigate environments. Model-free RL, including Q-Learning and Deep Q Networks (DQN), learns directly from experience and excels in game AI: DQN agents, for example, learned to play Atari video games from raw screen pixels without hand-crafted strategies.
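A minimal sketch of tabular Q-Learning is shown below on a hypothetical five-cell corridor, where the agent earns a reward of 1 for reaching the rightmost cell. To keep the example deterministic, every state-action pair is tried in each sweep instead of exploring randomly; the environment, hyperparameters, and function name are assumptions made for illustration.

```python
def q_learning_chain(n_states=5, alpha=0.5, gamma=0.9, sweeps=200):
    """Tabular Q-learning on a corridor; the goal is the last state."""
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]: 0=left, 1=right
    for _ in range(sweeps):
        # Visit every state-action pair instead of exploring at random
        for state in range(goal):
            for action in (0, 1):
                next_state = max(state - 1, 0) if action == 0 else state + 1
                reward = 1.0 if next_state == goal else 0.0
                future = 0.0 if next_state == goal else max(q[next_state])
                # Q-learning update: move the estimate toward the target
                target = reward + gamma * future
                q[state][action] += alpha * (target - q[state][action])
    return q


q_table = q_learning_chain()
# Greedy policy for every non-goal state
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
```

The learned values shrink by the discount factor with each step away from the goal, so the greedy policy is to move right from every cell.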

Deep Learning: Mimicking Human Cognition

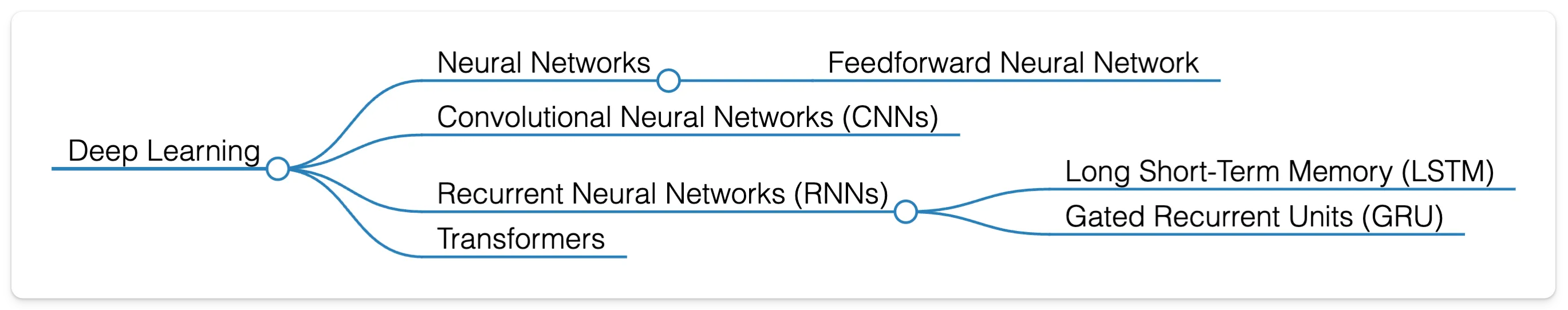

Deep Learning, a subset of ML, excels at processing large and complex datasets through neural networks and their specialized forms: Convolutional Neural Networks (CNNs) for image processing, Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), for sequential data, and Transformers, which have revolutionized natural language processing.

Neural networks form the foundation of voice recognition systems used by virtual assistants. CNNs have transformed medical imaging by improving disease diagnosis from scans. RNNs, especially LSTM networks, play a crucial role in developing predictive text features, enabling smartphones to suggest subsequent words as users type. Transformers have set new benchmarks in machine translation services, making real-time, cross-language communication more accessible.
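To give a feel for what a neural network computes, here is a tiny forward pass with one hidden layer of two ReLU units. The weights are hand-picked for this example rather than learned, and they make the network compute XOR, a function that no single linear layer can represent.

```python
def relu(z):
    """Rectified linear unit: pass positive values, clip negatives to zero."""
    return max(0.0, z)


def forward(x1, x2):
    """Two-input network with one hidden layer of two ReLU units."""
    # Hidden layer: hand-picked weights and biases (not learned)
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: linear combination of the hidden activations
    return 1.0 * h1 - 2.0 * h2


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
outputs = [forward(a, b) for a, b in inputs]
```

In practice the weights are learned from data, and deep networks stack many such layers.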

Critical Components of Machine Learning

No ML model can be effective without a solid foundation in the critical components that support Machine Learning algorithms. These components include Evaluation Metrics, Feature Engineering & Selection, and Optimization Techniques, each playing a crucial role in developing and fine-tuning ML models.

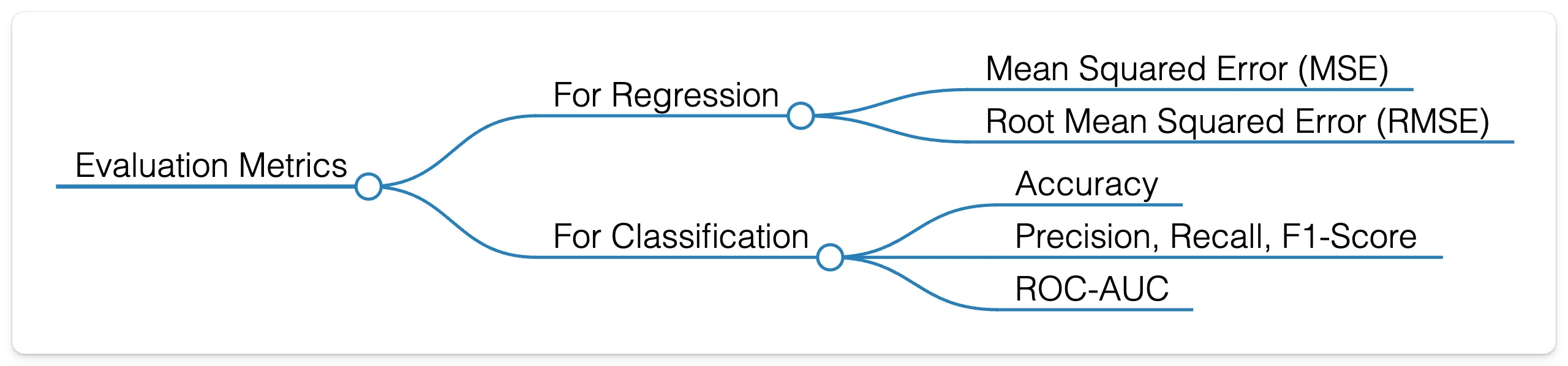

Evaluation Metrics

Evaluation Metrics provide a quantifiable measure of a model’s performance. For instance, Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) are used in Regression to quantify the difference between predicted and actual values, guiding real estate companies in refining their pricing models for better accuracy.
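Both regression metrics are straightforward to compute by hand, as the following sketch shows. The price figures are hypothetical.

```python
import math


def mse(actual, predicted):
    """Mean Squared Error: average of the squared differences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Squared Error: same units as the target variable."""
    return math.sqrt(mse(actual, predicted))


# Hypothetical house prices in thousands
actual_prices = [200.0, 250.0, 300.0]
predicted_prices = [210.0, 240.0, 320.0]

mse_value = mse(actual_prices, predicted_prices)
rmse_value = rmse(actual_prices, predicted_prices)
```

RMSE is often easier to interpret because it is expressed in the same units as the prices themselves.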

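Essential metrics in classification tasks include Accuracy, Precision, Recall, F1-Score, and ROC-AUC (Area Under the Receiver Operating Characteristic Curve). For example, in email spam detection, Recall measures how much of the spam is caught, while Precision measures how many of the flagged emails are actually spam, so high precision keeps legitimate emails out of the spam folder. Accuracy alone can be misleading when spam makes up only a small fraction of all mail.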
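The sketch below computes Accuracy, Precision, Recall, and F1-Score from true and predicted labels. The labels are hypothetical, with 1 standing for spam and 0 for a legitimate email.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # spam caught
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # legitimate flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # spam missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # legitimate passed
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels: 1 = spam, 0 = legitimate
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

metrics = classification_metrics(y_true, y_pred)
```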

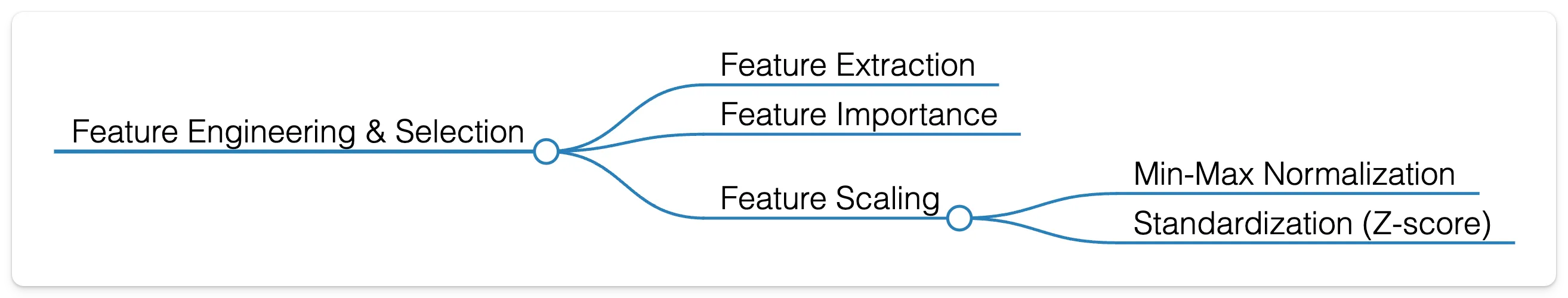

Feature Engineering and Selection

This process involves selecting the most informative data attributes and transforming them to enhance model performance.

Feature Extraction is used in text analysis to identify specific words or phrases as sentiment indicators. Feature Importance helps financial analysts pinpoint the key factors influencing stock market trends.

Feature Scaling, such as Min-Max Normalization and Standardization (Z-score), is crucial in preparing data for neural networks. It puts all inputs on a comparable scale, so that features with large numeric ranges do not dominate training.
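Both scaling methods can be sketched with the standard library alone. The income values are hypothetical, and the population standard deviation is used here as one common convention.

```python
import statistics


def min_max_scale(values):
    """Min-Max Normalization: rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Z-score Standardization: zero mean and unit standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / std for v in values]


# Hypothetical feature: annual income in thousands
incomes = [20.0, 40.0, 60.0, 80.0, 100.0]

scaled = min_max_scale(incomes)
z_scores = standardize(incomes)
```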

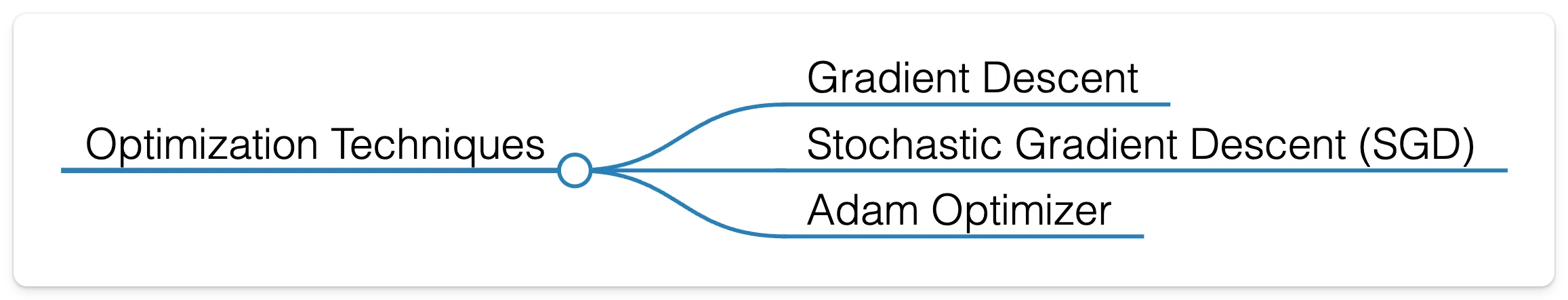

Optimization Techniques

Most Machine Learning algorithms are trained by minimizing a cost function (or, equivalently, maximizing an objective), which fine-tunes the model’s parameters for optimal performance. Gradient Descent is one of the most widely used optimization algorithms: it repeatedly adjusts the parameters in the direction that reduces the prediction error.
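A minimal sketch of Gradient Descent on a one-parameter cost function is shown below. The cost f(w) = (w - 3)², the learning rate, and the number of steps are assumptions chosen so that the behaviour is easy to follow.

```python
def gradient_descent(grad, start, lr=0.1, steps=100):
    """Repeatedly step against the gradient to reduce the cost."""
    w = start
    for _ in range(steps):
        w -= lr * grad(w)
    return w


# Hypothetical cost f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
def cost_gradient(w):
    return 2 * (w - 3)


w_min = gradient_descent(cost_gradient, start=0.0)
```

Each step shrinks the distance to the minimum at w = 3 by a constant factor. A learning rate that is too large would overshoot and diverge instead.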

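Variations of Gradient Descent, such as Stochastic Gradient Descent (SGD) and the Adam optimizer, are better suited to large, complex models, such as those that predict consumer behaviour or optimize logistics operations. SGD updates the parameters using small random batches of data rather than the full dataset, and Adam additionally adapts the step size for each parameter, which usually leads to faster convergence.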

Tools of the Trade

Frameworks and libraries like TensorFlow, PyTorch, Scikit-learn, and Keras are essential for developing and deploying ML models, making advanced analytics accessible to everyone.

Summary

The versatility and power of Machine Learning lie in its capability to learn from data, establishing it as a cornerstone of modern AI applications. Whether you’re a budding enthusiast or a seasoned professional, understanding the foundational elements of ML unlocks a world of possibilities for innovation and discovery.

Frequently Asked Questions (FAQ)

Q: What are the essential learning algorithms in Machine Learning?

A: Machine Learning algorithms fall into three main families: supervised learning, which learns from labelled data; unsupervised learning, which finds structure in unlabelled data; and reinforcement learning, which learns from rewards received while interacting with an environment.

Q: What are some everyday tasks that Machine Learning models can solve?

A: Machine Learning models can solve tasks such as regression, classification, clustering, anomaly detection, and building recommendation systems, depending on the learning algorithms employed.

Q: What is a mind map in the context of Machine Learning?

A: In the context of Machine Learning, a mind map is a visual representation that organizes various concepts, tasks, algorithms, and foundational elements of Machine Learning, helping to structure and comprehend complex information effectively.

Q: How can I download a Machine Learning mind map in PDF format?

A: To download a mind map on Machine Learning in PDF format, please email admin@rdiachenko.com with the subject “RD Blog: ML Mind Map”.